God’s speeches in the Book of Job end with a description of Leviathan. George Dyson begins his book Darwin Among the Machines with the Leviathan of Thomas Hobbes (1588-1679), the English philosopher whose famous 1651 book Leviathan established the foundation for most modern Western political philosophy.

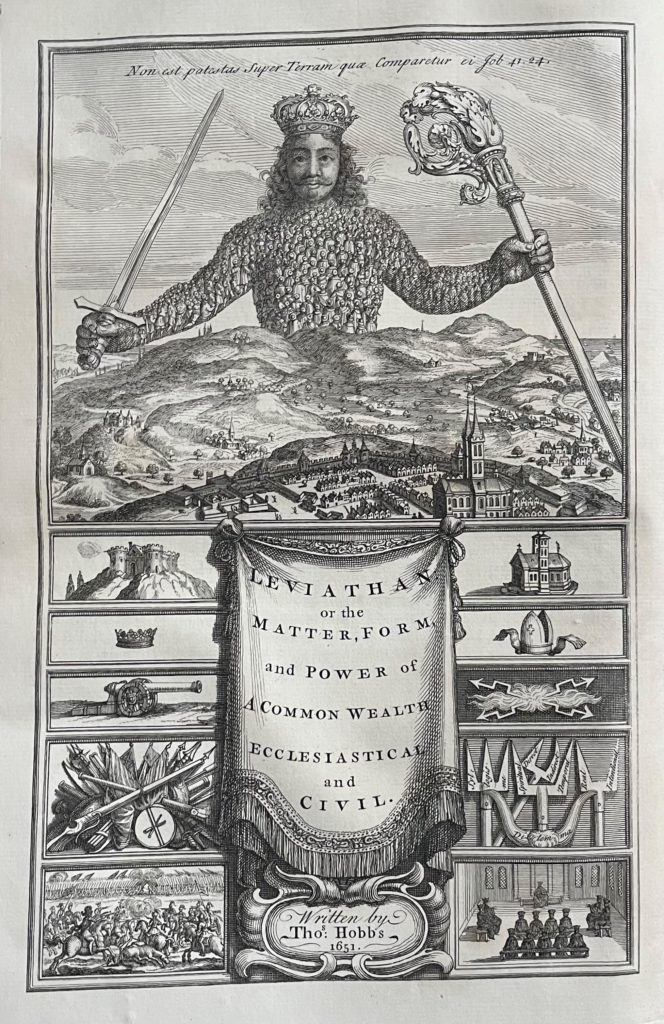

Leviathan’s frontispiece features an etching by a Parisian illustrator named Abraham Bosse. A giant crowned figure towers over the earth clutching a sword and a crosier. The figure’s torso and arms are composed of several hundred people. All face inward. A quote from the Book of Job runs in Latin along the top of the etching: “Non est potestas Super Terram quae Comparetur ei” (“There is no power on earth to be compared to him”). (Although the passage is listed on the frontispiece as Job 41:24, it is Job 41:33 in modern English translations of the Bible.)

The name “Leviathan” is derived from the Hebrew word for “sea monster.” A creature by that name appears in the Book of Psalms, the Book of Isaiah, and the Book of Job in the Old Testament. It also appears in apocrypha like the Book of Enoch. See Psalms 74 & 104, Isaiah 27, and Job 41:1-8.

Hobbes proposes that the natural state of humanity is anarchy — a veritable “war of all against all,” he says — where force rules and the strong dominate the weak. “Leviathan” serves as a metaphor for an ideal government erected in opposition to this state — one where a supreme sovereign exercises authority to guarantee security for the members of a commonwealth.

“Hobbes’s initial discussion of Leviathan relates to our course theme,” explains Caius, “since he likens it to an ‘Artificial Man.’”

Hobbes’s metaphor is a classic one: the metaphor of the “Political Body” or “body politic.” The “body politic” is a polity — such as a city, realm, or state — considered metaphorically as a physical body. This image originates in ancient Greek philosophy, and the term is derived from the Medieval Latin “corpus politicum.”

When Hobbes reimagines the body politic as an “Artificial Man,” he means “artificial” in the sense that humans have generated it through an act of artifice. Leviathan is a thing we’ve crafted in imitation of the kinds of organic bodies found in nature. More precisely, it’s modeled after the greatest of nature’s creations: i.e., the human form.

Indeed, Hobbes seems to have in mind here a kind of Automaton. “For seeing life is but a motion of Limbs,” he notes in the book’s intro, “why may we not say that all Automata (Engines that move themselves by springs and wheeles as doth a watch) have an artificiall life?” (9).

“What might Hobbes have had in mind with this reference to Automata?” asks Caius. “What kinds of Automata existed in 1651?”

An automaton, he reminds students, is a self-operating machine. Cuckoo clocks would be one example.

The oldest known automata were sacred statues of ancient Egypt and ancient Greece. During the early modern period, these legendary statues were said to possess the magical ability to answer questions put to them.

Greek mythology includes many examples of automata: Hephaestus created automata for his workshop; Talos was an artificial man made of bronze; Aristotle claims that Daedalus used quicksilver to make his wooden statue of Aphrodite move. Outside of myth, there was also the famous Antikythera mechanism, often described as the oldest known analogue computer.

The Renaissance witnessed a revival of interest in automata. Hydraulic and pneumatic automata were created for gardens. The French philosopher René Descartes, a contemporary of Hobbes, suggested that the bodies of animals are nothing more than complex machines. Mechanical toys also became objects of interest during this period.

The Mechanical Turk wasn’t constructed until 1770.

Caius and his students bring ChatGPT into the conversation. Students break into groups to devise prompts together. They then supply these to ChatGPT and discuss the results. Caius frames the exercise as a way of illustrating the idea of “collective” or “social” or “group” intelligence, also known as the “wisdom of the crowd,” i.e., the collective opinion of a diverse group of individuals, as opposed to that of a single expert. The idea is that the aggregate that emerges from collaboration or group effort amounts to more than the sum of its parts.