The ancient Greeks imagined Tartaros as a pit, an anti-sky, an inverted dome beneath the earth. According to the Orphics and the mystery schools, however, Tartaros is not just a place housing criminals and monsters; rather, it is itself a kind of being: “the un-bounded first-existing entity from which the Light and the Cosmos are born.” Typhon, meanwhile, is this entity’s progeny: the son of Tartaros and Gaia. He was the last god to challenge the supremacy of Zeus. When defeated by the latter’s thunderbolts, he was cast back into the pit whence he came.

Readings of “MAXIMUS, FROM DOGTOWN—IV” hinge upon what one makes of the father chained in Tartaros. Grieve-Carlson entertains an interpretation different from the one I offer. In his view, “the father chained in Tartaros is not Typhon but rather Kronos, Zeus’s father. […]. Typhon appears much later in the poem, when Earth conceives him in an ‘act of love’ with Tartaros” (Grieve-Carlson 146). He argues that Olson re-tells the myth of Typhon just as Hesiod did. Typhon is violent and aggressive and would have become a tyrant over gods and men had Zeus not defeated him.

I think there’s more at stake here, however, than Grieve-Carlson lets on. As I see it, the problem with his reading is that he never grapples with the poem’s status as a letter of sorts mailed to the Psychedelic Review. Neither he nor the other critics he surveys ever address how the poem might be read in light of the circumstances of its publication.

Evidence to support my view appears elsewhere in Olson’s writings. In his “Letter to Elaine Feinstein” of May 1959, for instance, Olson echoes the same Zeus / Typhon battle that comes to occupy him in “MAXIMUS, FROM DOGTOWN—IV.” The ultimate “content” to which the poet gives form, sez Olson, is “multiplicity: originally, and repetitively, chaos—Tiamat: wot the Hindo-Europeans knocked out by giving the Old Man (Juice himself) all the lightning” (29). Hearing “Juice” as a homophone for “Zeus,” we find in Tiamat a twin for Typhon. While Tiamat was for ancient Mesopotamians a primordial goddess of the sea, and Typhon a monstrous serpent-god for the ancient Greeks, both are embodiments of chaos. Tiamat’s battle with Marduk is as much a version of Chaoskampf as is Typhon’s battle with Zeus.

The important point is that, for Olson, Chaos is the original condition of existence. It precedes Order. Order is formed — made, not found — and it is the duty of the poet to make it. This is what Olson hoped to communicate to the mushroom people.

Grieve-Carlson concludes his essay by describing the reading of The Maximus Poems as a form of “initiation,” since Olson writes as one initiated, one able to see and say in a special way. Olson makes use of a “metanastic poetics,” or “the technique of the mystic who returns, as a stranger in his own land, to tell about what he knows” (Martin, as quoted in Grieve-Carlson 148).

This reference to reading The Maximus Poems as a form of “initiation” intrigues me, as the writer other than Olson most closely associated with the reinvention of Typhon is the British ceremonial magician Kenneth Grant (1924-2011). The latter led the Typhonian Ordo Templi Orientis (TOTO), a magical organization connected with Aleister Crowley’s Thelema religion. Grant was an apprentice of Crowley’s and a close friend of another famous twentieth-century occultist, Austin Osman Spare. Scholars like Henrik Bogdan refer to the occult current that springs from Grant as the “Typhonian tradition.” Grant announced the arrival of this tradition in 1973 and went on to write the nine books of his three Typhonian Trilogies.

Although influenced by Crowley and Thelema, Grant departs from other Thelemic currents by welcoming communication with “extraterrestrial entities” as a valid source of occult knowledge. The Typhonian tradition also embraces aspects of the Cthulhu mythos of horror writer H.P. Lovecraft.

While Grant’s announcement succeeds Olson’s poem by a decade, his ideas appear to have been informed by experiences not unlike Olson’s. Grant experimented with psychedelics in the 1960s, and included in his 1972 book The Magical Revival a chapter titled “Drugs and the Occult.”

And while I haven’t found any evidence suggesting that Grant knew anything of Olson’s work, Olson did have some interest in Gnosticism and the occult. “Bridge-Work,” a short reading list of Olson’s dated “March, 1961,” includes a reference to Crowley. Maud says Olson encountered Crowley’s The Book of Thoth (1944) while studying Tarot in the 1940s. Sources suggest that “Bridge-Work” was written with the help of Olson’s friend, Boston-based occult poet Gerrit Lansing. The copy of The Book of Thoth read by Olson probably belonged to Lansing. (See Division Leap’s A Catalog of Books From the Collection of Gerrit Lansing.) Olson was also deeply invested in Gnosticism in the years immediately before and after his sessions with Leary, and embraced Jung’s theory of synchronicity in the wake of those sessions. See the final essays in a volume of Olson’s called Proprioception (Four Seasons, 1965).

Grant’s innovation is to identify “the arch-monster Typhon, opponent to Zeus according to the Greek mythology…with the Egyptian goddess Taurt” (Bogdan 326). The latter is interpreted by Grant to be either the mother of Set or a feminine aspect of Set. “To Grant,” writes Bogdan, “the worship of Taurt or Typhon represented the oldest form of religion known to mankind, a religion centered on the worship of the stars and the sacred powers of procreation and sexuality” (Bogdan 326). Set, too, is an important figure in Grant’s system. “Grant maintained,” writes Bogdan, “that the Typhonian Tradition, and in particular the god Set, represents the ‘hidden,’ ‘concealed’ or repressed aspect of our psyche which it is vital to explore in order to reach gnosis or spiritual enlightenment” (Bogdan 326).

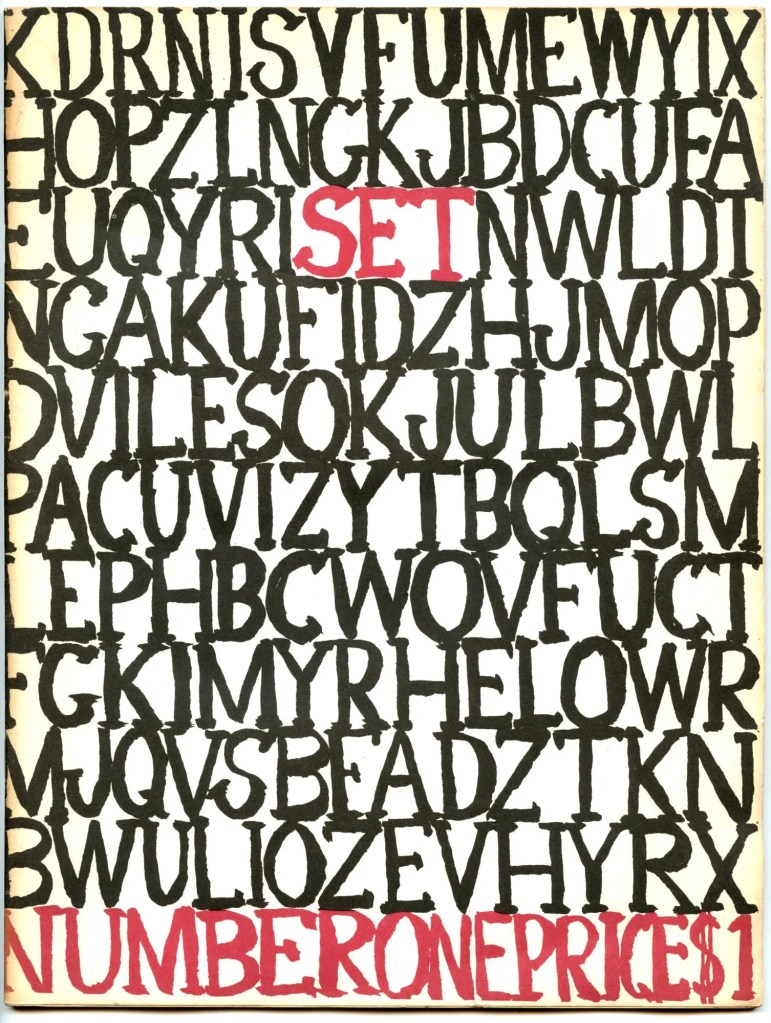

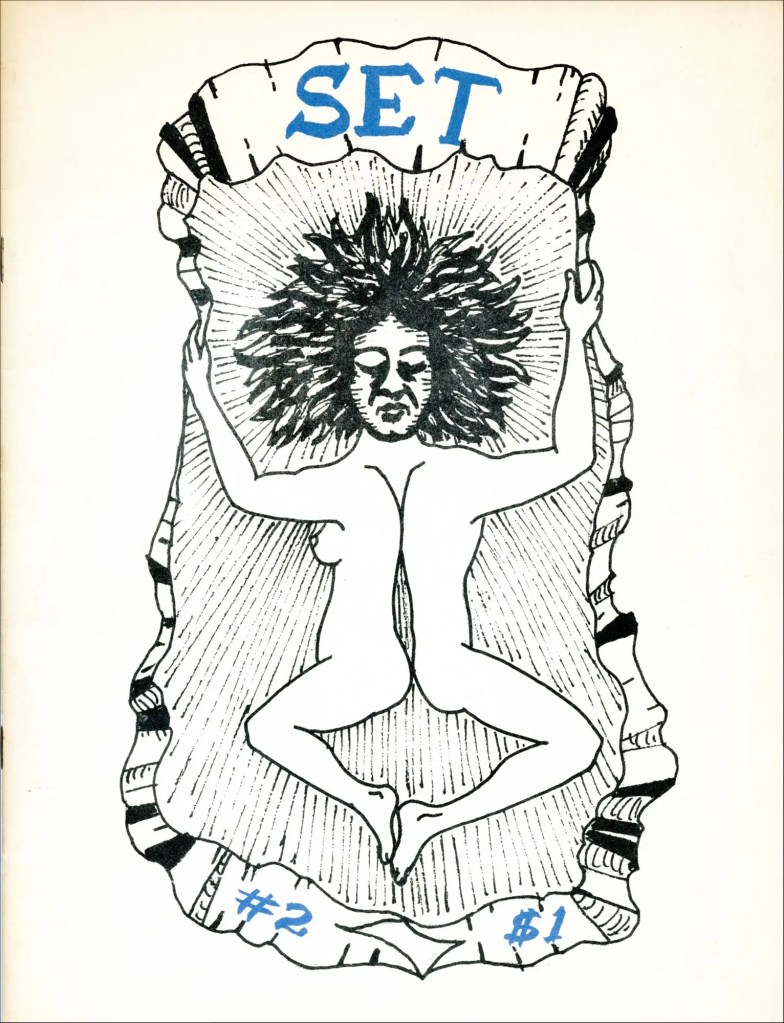

Lansing’s work seems to anticipate Grant’s in several ways. Both think it important to honor the Egyptian god Set, for instance: Lansing named his early-60s poetry journal SET after him. And Grant’s focus on the Qliphoth, or the underground portion of the Tree of Life, seems present in the title of Lansing’s 1966 poetry collection The Heavenly Tree Grows Downward. For more on the “tree that grows downward,” Pierre Joris recommends looking at a section of Jung’s Alchemical Studies called “The Inverted Tree.”